Multi-sensory UIs need multi-sensory designers

Until recently, design has focused primarily on screen-based experiences. But there is growing discontent with this ‘pictures under glass’ mentality. More meaningful, human interactions can be achieved when additional senses are engaged. Although the technology to enable these alternative interactions has been around for a while, it is only slowly finding its way into mainstream devices and applications outside of gaming. In addition to new tools and design principles, we need multi-sensory people to create meaningful yet functional experiences.

Some of the most innovative sensory interactions can be found in gaming devices. Gaming is ahead of the curve compared with regular consumer devices for the following reasons:

- It is better suited to novel, challenging controls, where players are rewarded for skill and progressive learning is part of the attraction.

- Users tend to be totally focused on the task of gaming.

- Game controllers can get away with being heavy or oddly shaped.

Great games systems can deliver a deeply emotional user experience. An entire games system can be built around a novel interaction (e.g. Wii or Kinect). In other consumer devices, it is likewise tempting to put interaction centre-stage as a key selling point of the product. This is understandable given the additional design and engineering effort required to build multi-sensory experiences. But history has repeatedly shown that such novel interactions must quietly and intuitively blend into the greater purpose of the product.

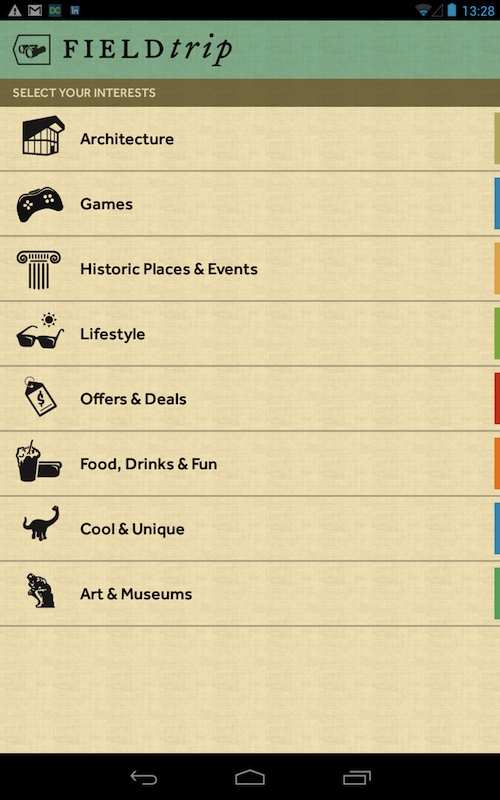

One common situation that can benefit from non-screen interactions is navigation. A user finding his or her way through a busy, sometimes loud, public space will be distracted by the surroundings. This is a totally different context from a gaming system or TV at home: the user needs clear, private feedback and simple, forgiving control. Google’s Field Trip app applies these principles in an interesting and subtle way: when you are near an interesting location, it alerts you with a vibration, then briefly describes the location in your headphones.

Sensation has been on the MEX agenda for a while, in particular for supplementing ‘inhibited activities’, in which the user isn’t fully focused on the screen. At the MEX Event in September 2012, Charlotte Magnusson presented HaptiMap: a set of tools and guidelines for developing multi-modal navigation systems (watch the video of her MEX Session here). Sophie Arkette experimented with audible and haptic feedback devices to give financial traders an edge on the trading floor. She found that quite rich information and alerts could be transmitted if they were preceded by a ‘meta signal’ to prepare the user.

But tools and principles are not enough; we need design decision makers who are able to think in the additional dimensions of sound, feeling and gesture. The growing realisation that there is life beyond the screen will hopefully encourage designers to dust off their physical interaction skills (I know you learnt them at uni!) and take on the challenge of creating more meaningful sensory experiences.

The work of the MEX initiative continues in this area next week at our March 2013 MEX event, where several speakers will address Pathway #9 and a working session will explore multi-sensory tools that allow audible, haptic and visual interactions to be prototyped in the same environment. Other questions to be explored include:

- Have today’s smooth, glass touchscreens caused designers to forget the senses of sound and tactility in interaction sequences?

- Which digital experiences are uniquely enabled through audible and tactile elements?

- Which sounds and feelings are innately suited to digital interaction? Is there a need for common palettes of audible and tactile elements?

- How should visual, tactile and audible elements adapt in response to changes in the ambient environment? How should the priority afforded to different senses change in response to context?

- Does the success of audible and tactile interactions increase when users have been alerted to expect them?

- How can the design process evolve towards parity for audible, tactile and visual elements?

The next MEX is in London on 26th – 27th March. Register for the last remaining tickets (£1499) here.
