Summary from MEX/16: new skills for a changing world of experience design

This much was clear from MEX/16: we must prepare for a future quite different from today’s world of smartphone primacy.

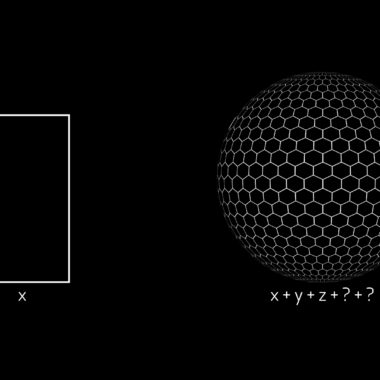

Emerging technologies such as virtual reality, artificial intelligence and multi-sensory interfaces are changing the skills required for good user-centred design. The impact will be felt throughout, from organisational strategy to the crafts of interaction design and user research.

Although the agenda for the 16th international MEX in London had been designed in advance around these assumptions, I was unprepared for just how rapid the change could be and how significant its implications.

We started with a simple and bold premise: if one continues to limit oneself to established experience design techniques, one at best stands still and at worst risks irrelevance. Instead, our conference mission was to uncover hidden, less travelled paths towards a future of experience design five years hence.

The community was diverse: from agency CEOs to those leading experience design in-house at global brands; industries ranging from healthcare to financial services; practitioners spanning copywriting, motion design, user research and brand management. This diversity informed and elevated the working sessions, equipping the combined gathering to see beyond the echo chambers of narrow roles or industry silos.

It is impossible to cover all of the valuable insights from two days of intense collaboration. Instead, I’ve picked five moments you might find especially thought-provoking:

- How does your app smell, taste and sound? Tom Pursey and Peter Law of Flying Object took us on a fast-paced journey through pioneering work they’d done for the internationally renowned Tate Gallery in London. Their project engaged Tate visitors in a multi-sensory experience inspired by four paintings, combining elements of taste, sound, smell and tactile effects. At MEX/16, their workshop challenged participants to examine how emerging technologies like VR and haptics might broaden digital experience design. Their methods of breaking experience down into its elemental, sensory building blocks and identifying unexpected pairings of colour, sound, tactility and motion were inspiring. This isn’t about a clichéd path to ‘smell-o-vision’, but rather a wake-up call: digital experience teams which limit themselves to the narrow sensory dimension of visual effects will soon be left behind in a world already changing with Apple’s 3D Touch, voice UIs like Amazon’s Alexa and full-body experiences such as the HTC Vive.

- Why does the bullshit gap persist? UX, digital transformation, user-centred design…there’s never been more lip service paid to these terms. Yet despite this, and budgets being at an all-time high, the ‘bullshit gap’ – the difference between what organisations say and do – remains painfully and obviously wide. Giles Colborne, managing director of cx partners and someone who has literally written the book on usability, asked participants to consider the most effective strategic tools for closing this gap. Citing an example of work they’d done for the financial services organisation Nationwide, Giles shared methods for understanding culture, quantifying appetite for different types of change and adapting project strategy accordingly so as to deliver measurable impact. It brought into focus a new role for agencies – and the new skills they need – as they become educators for increasingly large in-house teams.

- When does artificial intelligence deliver meaningfully better UX? Understanding and responding to user behaviour at scale through machine learning has the potential to open up whole new areas of digital experience. In the case of Ed Rex and Jukedeck, it means they’re able to compose music on the fly to suit the mood of any video or moment. However, the nebulous term ‘artificial intelligence’ is far from a panacea. Ed explained the practical challenges around processing speed, interface design and managing user expectations which they’ve had to overcome to ensure Jukedeck’s smarts feel magical to users. It left me wondering how service providers of all kinds will strike the balance between digital elements which thrive on dynamic, artificial intelligence-powered responses and those which benefit from remaining static, immovable totems of the brand.

- Is the day coming when we can all experience the same programmable emotion in the same instant? Apala Lahiri Chavan, President at Human Factors International, shared her deep experience of conducting user-centred design across diverse cultures. She asked us to consider a multi-decade timescale of behavioural change and the influence of digital. If virtual reality and other forms of emotionally engaging digital services continue to advance, we must accept the possibility of an increasingly direct channel between digital providers and users’ most powerful feelings. At the fringe of technological advance, transhumanists are already experimenting with ways of augmenting their physical movements and emotions through digital devices. Too much, too soon…or too significant to ignore?

- How do you find the hidden truths which illuminate user research? Aaron Garner, Director at the Emotional Intelligence Academy, talked about his work with security services around the world, employing an understanding of micro-expressions to gain deeper insight into human behaviour. These flickers of movement, which might occur for just a fraction of a second, provide a glimpse of a user’s true feelings before they begin to modulate their response according to social context and expectations. In Aaron’s day-to-day work, this might mean detecting a security threat or averting violence, but the lessons are equally applicable to user research. No matter which new technologies emerge on the interaction side, improving understanding of user behaviour is fundamental to improving overall experience design. Aaron’s engaging talk, where he had all of our MEX participants contorting their faces into amusingly amplified versions of micro-expressions, showed how effectively tangential learnings can be utilised to improve the practice of experience design.

The process of distilling all of the results from MEX/16 continues. I’ll share additional posts here.

In the meantime, you can also listen to a special summary edition of the MEX podcast, where Alex Guest and I discuss more sessions from the conference.

MEX was made possible by our expert speakers and workshop leaders: Rachel Liu, Giles Colborne, Thomas Foster, Fu Ho Lee, Peter Law, Tom Pursey, Patrizia Bertini, Apala Lahiri Chavan, Ed Rex, Aaron Garner, Emily Tulloh, Jonathan Chippindale, Ana Moutinho, Rob Graham, Alexa Shoen, Jonathan Lovatt-Young and Alex Guest; partners Brunel University and Wallacespace; and, of course, our sponsoring patrons Flying Object, Human Factors International and Tribal Worldwide. Thanks to all for their support.

Please get in touch if you’re interested in speaking at, sponsoring or participating in future MEX events.
